1. User space

mmap, munmap - map or unmap files or devices into memory.

These calls map a file or a device into the address space of the calling process, and remove such a mapping again.
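As a rough illustration of the user-space side, the sketch below maps a scratch file with `mmap()`, writes through the mapping, unmaps it with `munmap()`, and reads the bytes back with `pread()`. The helper name `mmap_roundtrip` and the `/tmp/mmap_demo_XXXXXX` template are assumptions made for this example only; they do not come from the original text.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <fcntl.h>
#include <stdlib.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/*
 * Store `msg` in a temporary file through a shared mapping, unmap it,
 * then read the file back with pread() into `out`.
 * Returns 0 on success, -1 on any failure.
 * (Hypothetical helper, for illustration only.)
 */
int mmap_roundtrip(const char *msg, char *out, size_t out_len)
{
    char path[] = "/tmp/mmap_demo_XXXXXX"; /* assumed scratch location */
    size_t len = strlen(msg) + 1;
    char *p;
    int fd;
    int rc = -1;

    if (len > out_len)
        return -1;

    fd = mkstemp(path);
    if (fd < 0)
        return -1;
    unlink(path); /* the file vanishes once fd is closed */

    /* A file mapping needs backing bytes, so grow the file first. */
    if (ftruncate(fd, (off_t)len) != 0)
        goto out_close;

    p = mmap(NULL, len, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    if (p == MAP_FAILED)
        goto out_close;

    memcpy(p, msg, len); /* a plain store, no write() call */

    if (munmap(p, len) != 0)
        goto out_close;

    /* MAP_SHARED stores are visible through the file descriptor. */
    if (pread(fd, out, len, 0) == (ssize_t)len)
        rc = 0;

out_close:
    close(fd);
    return rc;
}
```

`MAP_SHARED` is what makes the store visible through the file; with `MAP_PRIVATE` the write would stay in a private copy and `pread()` would return zeros.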


2. Kernel space

2.1. Static mapping

start_kernel() ->

setup_arch() ->

paging_init() ->

devicemaps_init(const struct machine_desc *mdesc) ->

mdesc->map_io()

The structures involved are struct machine_desc and struct map_desc:

struct machine_desc {

unsigned int nr; /* architecture number */

const char *name; /* architecture name */

unsigned int nr_irqs; /* number of IRQs */

phys_addr_t dma_zone_size; /* size of DMA-able area */

void (*init_meminfo)(void);

void (*reserve)(void);/* reserve mem blocks */

void (*map_io)(void);/* IO mapping function */

void (*init_early)(void);

void (*init_irq)(void);

void (*init_time)(void);

void (*init_machine)(void);

void (*init_late)(void);

void (*handle_irq)(struct pt_regs *);

void (*restart)(enum reboot_mode, const char *);

};

struct map_desc {

unsigned long virtual;

unsigned long pfn;

unsigned long length;

unsigned int type;

};

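For example, in arch/arm/mach-s3c24xx/mach-smdk2440.c:

smdk2440_map_io() ->

iotable_init(struct map_desc *io_desc, int nr)

static struct map_desc smdk2440_iodesc[] __initdata = { ... };

This step defines the relationship between physical and virtual addresses. The table is fixed at compile time and is never modified afterwards. Static mapping is therefore typically used for physical-to-virtual mappings that rarely change, such as register mappings.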
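To make the idea of a compile-time table concrete, here is a minimal user-space sketch. It reuses the field layout of `struct map_desc` quoted above, but the table `demo_iodesc`, its addresses, the `MT_DEVICE_DEMO` placeholder, and the lookup helper `static_phys_to_virt` are all hypothetical and exist only for illustration.

```c
#include <assert.h>
#include <stddef.h>

#define PAGE_SHIFT 12

/* Same fields as the struct map_desc shown in the text. */
struct map_desc {
    unsigned long virtual; /* virtual base address */
    unsigned long pfn;     /* physical page frame number */
    unsigned long length;  /* size of the region in bytes */
    unsigned int type;     /* memory type */
};

#define MT_DEVICE_DEMO 0u /* placeholder type value (assumption) */

/* Hypothetical board table: fixed at compile time, never modified. */
static const struct map_desc demo_iodesc[] = {
    { 0xF0000000UL, 0x50000000UL >> PAGE_SHIFT, 0x00100000UL, MT_DEVICE_DEMO },
    { 0xF0100000UL, 0x56000000UL >> PAGE_SHIFT, 0x00001000UL, MT_DEVICE_DEMO },
};

/*
 * Translate a physical address through the static table.
 * Returns the virtual address, or 0 if no entry covers `phys`.
 */
unsigned long static_phys_to_virt(const struct map_desc *tbl, size_t n,
                                  unsigned long phys)
{
    for (size_t i = 0; i < n; i++) {
        unsigned long base = tbl[i].pfn << PAGE_SHIFT;

        if (phys >= base && phys - base < tbl[i].length)
            return tbl[i].virtual + (phys - base);
    }
    return 0;
}
```

Because each entry is a fixed (virtual, pfn, length) triple, a register at a known physical address always appears at the same virtual address, which is why this scheme suits on-chip peripherals.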

2.2. Page table creation

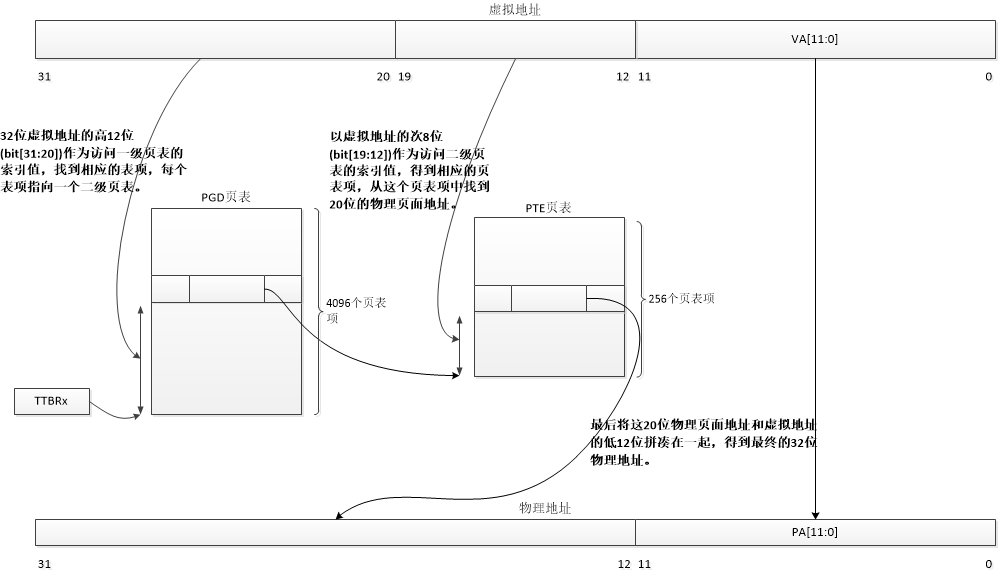

Linux下的页表映射分为两种,一是Linux自身的页表映射,另一种是ARM32 MMU硬件的映射。这样是为了更大的灵活性,可以映射Linux bits 到 硬件tables 上,例如有YOUNG, DIRTY bits.

参见 linux/arch/arm/include/asm/pagetable-2level.h 注释:1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

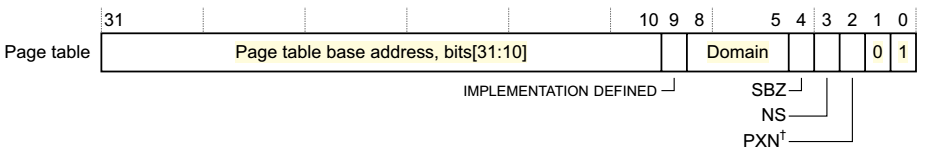

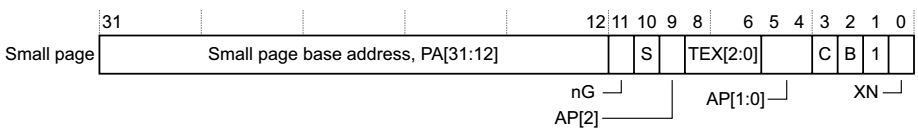

35/*

* Hardware-wise, we have a two level page table structure, where the first

* level has 4096 entries, and the second level has 256 entries. Each entry

* is one 32-bit word. Most of the bits in the second level entry are used

* by hardware, and there aren't any "accessed" and "dirty" bits.

*

* Linux on the other hand has a three level page table structure, which can

* be wrapped to fit a two level page table structure easily - using the PGD

* and PTE only. However, Linux also expects one "PTE" table per page, and

* at least a "dirty" bit.

*

* Therefore, we tweak the implementation slightly - we tell Linux that we

* have 2048 entries in the first level, each of which is 8 bytes (iow, two

* hardware pointers to the second level.) The second level contains two

* hardware PTE tables arranged contiguously, preceded by Linux versions

* which contain the state information Linux needs. We, therefore, end up

* with 512 entries in the "PTE" level.

*

* This leads to the page tables having the following layout:

*

*    pgd             pte
* |        |
* +--------+
* |        |       +------------+ +0
* +- - - - +       | Linux pt 0 |
* |        |       +------------+ +1024
* +--------+ +0    | Linux pt 1 |
* |        |-----> +------------+ +2048
* +- - - - + +4    |  h/w pt 0  |
* |        |-----> +------------+ +3072
* +--------+ +8    |  h/w pt 1  |
* |        |       +------------+ +4096

*

* See L_PTE_xxx below for definitions of bits in the "Linux pt", and

* PTE_xxx for definitions of bits appearing in the "h/w pt".

*/

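Generic 32-bit Linux uses a three-level mapping, PGD -> PMD -> PTE. 64-bit Linux uses a four-level mapping, PGD -> PUD -> PMD -> PTE, which adds the PUD.

PGD - Page Global Directory

PUD - Page Upper Directory

PMD - Page Middle Directory

PTE - Page Table Entry

On ARM32, Linux uses a two-level mapping and folds away the PMD. Three-level mapping is used only when CONFIG_ARM_LPAE is defined.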
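The arithmetic implied by the pgtable-2level.h comment can be checked with a few lines of C. This is a sketch under the numbers stated in that comment (4096 x 256 hardware entries, 2048 x 512 as seen by Linux, hardware copy at +2048 bytes); the function names and macro names are hypothetical, chosen for this example.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT      12   /* 4 KiB pages */
#define PGDIR_SHIFT     21   /* Linux view: one PGD entry covers 2 MiB */
#define PTRS_PER_PTE    512  /* Linux view: 512 PTEs per PTE page */
#define HW_L1_SHIFT     20   /* hardware view: one L1 entry covers 1 MiB */
#define HW_L2_ENTRIES   256  /* hardware view: 256 entries per L2 table */
#define HW_TABLE_OFFSET 2048 /* "h/w pt" starts 2048 bytes into the page */

/* Index into the 2048-entry PGD that Linux sees. */
uint32_t linux_pgd_index(uint32_t va) { return va >> PGDIR_SHIFT; }

/* Index into the 512-entry "Linux pt" area of the PTE page. */
uint32_t linux_pte_index(uint32_t va)
{
    return (va >> PAGE_SHIFT) & (PTRS_PER_PTE - 1);
}

/* Index into the 4096-entry first-level table the MMU walks. */
uint32_t hw_l1_index(uint32_t va) { return va >> HW_L1_SHIFT; }

/* Index into one 256-entry hardware second-level table. */
uint32_t hw_l2_index(uint32_t va)
{
    return (va >> PAGE_SHIFT) & (HW_L2_ENTRIES - 1);
}

/* Byte offset of the hardware PTE inside the 4 KiB PTE page. */
uint32_t hw_pte_byte_offset(uint32_t va)
{
    return HW_TABLE_OFFSET + linux_pte_index(va) * 4;
}
```

For any address, `hw_l1_index(va)` equals `2 * linux_pgd_index(va) + (linux_pte_index(va) >> 8)`, which is the "two hardware pointers per 8-byte PGD entry" arrangement the comment describes.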

For more details, see <

iotable_init()->

create_mapping()->

alloc_init_pud()->

alloc_init_pmd()->

alloc_init_pte()->

set_pte_ext->

cpu_set_pte_ext()->

cpu_v7_set_pte_ext() (linux/arch/arm/mm/proc-v7-2level.S)

void __init create_mapping(struct map_desc *md)

Depending on the kernel configuration, set_pte_ext() ultimately resolves to cpu_v7_set_pte_ext():

/*

* cpu_v7_set_pte_ext(ptep, pte)

*

* Set a level 2 translation table entry.

*

* - ptep - pointer to level 2 translation table entry

* (hardware version is stored at +2048 bytes)

* - pte - PTE value to store

* - ext - value for extended PTE bits

*/

ENTRY(cpu_v7_set_pte_ext)

str r1, [r0] @ linux version

bic r3, r1, #0x000003f0

bic r3, r3, #PTE_TYPE_MASK

orr r3, r3, r2

orr r3, r3, #PTE_EXT_AP0 | 2

tst r1, #1 << 4

orrne r3, r3, #PTE_EXT_TEX(1)

eor r1, r1, #L_PTE_DIRTY

tst r1, #L_PTE_RDONLY | L_PTE_DIRTY

orrne r3, r3, #PTE_EXT_APX

tst r1, #L_PTE_USER

orrne r3, r3, #PTE_EXT_AP1

tst r1, #L_PTE_XN

orrne r3, r3, #PTE_EXT_XN

tst r1, #L_PTE_YOUNG

tstne r1, #L_PTE_VALID

eorne r1, r1, #L_PTE_NONE

tstne r1, #L_PTE_NONE

moveq r3, #0

ARM( str r3, [r0, #2048]! )

THUMB( add r0, r0, #2048 )

THUMB( str r3, [r0] )

ALT_SMP(W(nop))

ALT_UP (mcr p15, 0, r0, c7, c10, 1) @ flush_pte

bx lr

ENDPROC(cpu_v7_set_pte_ext)
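The control flow of the assembly above can be paraphrased in C. The sketch below is a simplified model, not a port: the `SK_*` flag positions are assumptions invented for this example (they are not the kernel's `L_PTE_*` or `PTE_EXT_*` values), and the TEX, USER, XN bits and the final cache-line clean are left out. It keeps only the two ideas that matter here: the hardware copy lives 2048 bytes after the Linux copy, and the "young" and "dirty" bits are emulated by withholding hardware permissions.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Illustrative Linux-side flags (assumed bit positions). */
#define SK_VALID  (1u << 0)
#define SK_YOUNG  (1u << 1)
#define SK_DIRTY  (1u << 2)
#define SK_RDONLY (1u << 3)
#define SK_NONE   (1u << 4)

/* Illustrative hardware-side flags (assumed bit positions). */
#define SK_HW_PRESENT  (1u << 0)
#define SK_HW_READONLY (1u << 1)

#define SK_ADDR_MASK       0xFFFFF000u
#define SK_HW_OFFSET_WORDS (2048 / sizeof(uint32_t)) /* +2048 bytes */

/* Store the Linux PTE and derive the hardware PTE from it. */
void sketch_set_pte(uint32_t *ptep, uint32_t pte)
{
    uint32_t hw = (pte & SK_ADDR_MASK) | SK_HW_PRESENT;

    ptep[0] = pte; /* "linux version" */

    /*
     * Dirty-bit emulation: the hardware entry is writable only when
     * the page is not read-only AND is already dirty. The first write
     * to a clean page therefore faults, and the fault handler can set
     * the dirty bit.
     */
    if ((pte & SK_RDONLY) || !(pte & SK_DIRTY))
        hw |= SK_HW_READONLY;

    /*
     * Young-bit emulation: an entry that is not young, not valid, or
     * marked "none" gets an all-zero hardware entry, so any access
     * faults.
     */
    if (!(pte & SK_YOUNG) || !(pte & SK_VALID) || (pte & SK_NONE))
        hw = 0;

    ptep[SK_HW_OFFSET_WORDS] = hw; /* hardware version at +2048 bytes */
}
```

The buffer passed in must be a full 4 KiB PTE page (1024 words) with `ptep` pointing into its first half, mirroring the layout drawn in the pgtable-2level.h comment.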

2.3. Dynamic mapping

2.3.1. virtual memory data struct

linux/include/linux/mm_types.h

struct mm_struct { ... };

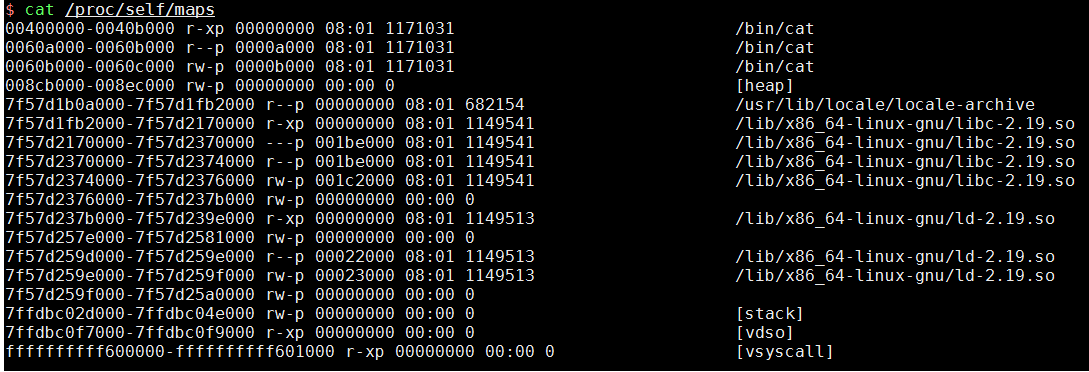

Inside the kernel, the mm_struct of the current process can be reached through current:

struct mm_struct *mm = current->mm;

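In addition, we can look under /proc/; the fields of one mapping line are:

| Field | Meaning |
|---|---|
| 00400000-0040b000 | vma->vm_start ~ vma->vm_end |
| r-xp | vma->vm_flags |
| 00000000 | vma->vm_pgoff (printed as a byte offset into the file) |
| 08:01 | major:minor device number |
| 1171031 | inode number of the mapped file |
| /bin/cat | name of the mapped file |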
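The field table can be exercised with a small parser. This sketch assumes the line format of the per-process maps file (`/proc/<pid>/maps`), which is my reading of the truncated path in the text; `struct maps_entry` and `parse_maps_line` are hypothetical names introduced here.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* One parsed mapping line (hypothetical struct for this example). */
struct maps_entry {
    unsigned long start;  /* vma->vm_start */
    unsigned long end;    /* vma->vm_end */
    char perms[5];        /* rwxp / rwxs, derived from vma->vm_flags */
    unsigned long offset; /* byte offset into the file */
    unsigned int major;   /* device major number */
    unsigned int minor;   /* device minor number */
    unsigned long inode;  /* inode number, 0 for anonymous mappings */
    char path[256];       /* file name, empty for anonymous mappings */
};

/*
 * Parse one line such as
 *   00400000-0040b000 r-xp 00000000 08:01 1171031 /bin/cat
 * Returns 0 on success, -1 if the mandatory fields are missing.
 * Paths containing spaces are cut at the first space.
 */
int parse_maps_line(const char *line, struct maps_entry *e)
{
    int n;

    e->path[0] = '\0';
    n = sscanf(line, "%lx-%lx %4s %lx %x:%x %lu %255s",
               &e->start, &e->end, e->perms, &e->offset,
               &e->major, &e->minor, &e->inode, e->path);

    /* The path column is optional, so 7 converted fields suffice. */
    return n >= 7 ? 0 : -1;
}
```

Feeding it each line read with `fgets()` from the maps file of a process yields one entry per VMA.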

2.3.2. memory mapping

When working with high memory addresses, we need the following functions:

void *kmap(struct page *page)

void kunmap(struct page *page)

void *kmap_atomic(struct page * page)

void kunmap_atomic(void *addr)

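If page lies in low memory, kmap() simply returns its existing virtual address. If it lies in high memory, kmap() creates a special mapping in a region of kernel address space reserved for that purpose. kmap() may sleep, whereas kmap_atomic() is atomic and must not sleep.

The kernel mapping function: remap_pfn_range()

Note:

For memory that comes from high memory or from vmalloc(), we can only map one PAGE_SIZE at a time, because such memory is virtually contiguous but not physically contiguous.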

int remap_pfn_range(struct vm_area_struct *vma, unsigned long addr, ...

The call chain is:

remap_pfn_range()->

remap_pud_range()->

remap_pmd_range()->

remap_pte_range()


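As you can see, this is much the same as the static mapping above: it also ends up calling set_pte_ext() -> cpu_v7_set_pte_ext().

In essence, then, every mapping comes down to building page table entries (2-level or 3-level) and flushing them.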
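The "build page table entries" step can be imitated in user space. The following sketch models a two-level table with the Linux-view geometry from section 2.2 (2048 PGD slots, 512 PTEs each). Everything prefixed `sketch_` is hypothetical; it mirrors only the shape of the pud/pmd/pte range walk, not the kernel code, and it never frees the tables it allocates.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define PAGE_SHIFT   12
#define PAGE_SIZE    (1u << PAGE_SHIFT)
#define PGDIR_SHIFT  21
#define PTRS_PER_PGD 2048
#define PTRS_PER_PTE 512
#define SK_PRESENT   1u /* assumed "present" bit for this model */

struct sketch_mm {
    uint32_t *pgd[PTRS_PER_PGD]; /* slot -> PTE table, or NULL */
};

/* Map `size` bytes at `va` onto contiguous frames starting at `pfn`. */
int sketch_remap_pfn_range(struct sketch_mm *mm, uint32_t va,
                           uint32_t pfn, uint32_t size)
{
    uint64_t end = (uint64_t)va + size;

    for (uint64_t a = va; a < end; a += PAGE_SIZE, pfn++) {
        uint32_t pgd_i = (uint32_t)a >> PGDIR_SHIFT;
        uint32_t pte_i = ((uint32_t)a >> PAGE_SHIFT) & (PTRS_PER_PTE - 1);

        /* Allocate the second-level table on first use. */
        if (!mm->pgd[pgd_i]) {
            mm->pgd[pgd_i] = calloc(PTRS_PER_PTE, sizeof(uint32_t));
            if (!mm->pgd[pgd_i])
                return -1;
        }
        mm->pgd[pgd_i][pte_i] = (pfn << PAGE_SHIFT) | SK_PRESENT;
    }
    return 0;
}

/* Physically scattered pages must be mapped one PAGE_SIZE at a time. */
int sketch_remap_scattered(struct sketch_mm *mm, uint32_t va,
                           const uint32_t *pfns, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        uint32_t page_va = va + (uint32_t)i * PAGE_SIZE;

        if (sketch_remap_pfn_range(mm, page_va, pfns[i], PAGE_SIZE) != 0)
            return -1;
    }
    return 0;
}

/* Walk the tables; returns the physical address, or 0 if unmapped. */
uint32_t sketch_translate(const struct sketch_mm *mm, uint32_t va)
{
    const uint32_t *pt = mm->pgd[va >> PGDIR_SHIFT];
    uint32_t pte;

    if (!pt)
        return 0;
    pte = pt[(va >> PAGE_SHIFT) & (PTRS_PER_PTE - 1)];
    if (!(pte & SK_PRESENT))
        return 0;
    return (pte & ~(PAGE_SIZE - 1)) | (va & (PAGE_SIZE - 1));
}
```

`sketch_remap_scattered` shows why vmalloc-style memory needs a per-page loop: each page has its own frame number, so a single contiguous range call cannot describe it.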

2.3.3. io memory mapping

ioremap() maps an I/O address range into the kernel's virtual address space so that it can be accessed conveniently. See also the Baidu Baike entry on ioremap.

ioremap()->

__arm_ioremap()->

__arm_ioremap_caller()->

__arm_ioremap_pfn_caller()->

ioremap_page_range()->

ioremap_pud_range()->

ioremap_pmd_range()->

ioremap_pte_range()->

set_pte_at()->

set_pte_ext()->

cpu_set_pte_ext()->

cpu_v7_set_pte_ext() (linux/arch/arm/mm/proc-v7-2level.S)

As you can see, this is broadly the same as the static and dynamic mappings above. The one difference is that __arm_ioremap_pfn_caller() calls get_vm_area_caller() to reserve a vm_area.

void __iomem * __arm_ioremap_pfn_caller(unsigned long pfn, ...

get_vm_area_caller() allocates a range from the region between VMALLOC_START and VMALLOC_END.

Note:

Because ioremap() maps a physically contiguous I/O region to virtual addresses that the kernel can access, physical contiguity is guaranteed. For a detailed analysis of get_vm_area_caller(), the core function in vmalloc.c, see the references at the end.

struct vm_struct *get_vm_area_caller(unsigned long size, unsigned long flags,

const void *caller)

{

return __get_vm_area_node(size, 1, flags, VMALLOC_START, VMALLOC_END,

NUMA_NO_NODE, GFP_KERNEL, caller);

}
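The bookkeeping around the ioremap path can be sketched as follows. This is a user-space model under stated assumptions: the window `SK_VMALLOC_START..SK_VMALLOC_END` uses made-up addresses, the allocator is a simple bump pointer rather than the kernel's real vm_area search, and the names `sk_get_vm_area` and `sk_ioremap` are hypothetical. The part it tries to capture is the alignment step: split the physical address into a page frame and an in-page offset, round the span up to whole pages, reserve virtual space, then add the offset back.

```c
#include <assert.h>
#include <stdint.h>

#define PAGE_SHIFT 12
#define PAGE_SIZE  (1u << PAGE_SHIFT)
#define PAGE_MASK  (~(PAGE_SIZE - 1))

/* Made-up window standing in for VMALLOC_START..VMALLOC_END. */
#define SK_VMALLOC_START 0xF0000000u
#define SK_VMALLOC_END   0xFF000000u

static uint32_t sk_next_free = SK_VMALLOC_START; /* bump cursor */

/* Reserve `size` bytes of virtual space; returns 0 when exhausted. */
uint32_t sk_get_vm_area(uint32_t size)
{
    uint32_t va;

    if (size == 0 || size > SK_VMALLOC_END - sk_next_free)
        return 0;
    va = sk_next_free;
    sk_next_free += size;
    return va;
}

struct sk_ioremap_result {
    uint32_t vaddr;       /* address handed back to the caller */
    uint32_t pfn;         /* first physical frame to map */
    uint32_t mapped_size; /* whole pages actually mapped, in bytes */
};

/* Model of the address arithmetic; returns 0 on success, -1 on failure. */
int sk_ioremap(uint32_t phys, uint32_t size, struct sk_ioremap_result *r)
{
    uint32_t offset = phys & ~PAGE_MASK; /* position inside the page */
    uint32_t span = (offset + size + PAGE_SIZE - 1) & PAGE_MASK;
    uint32_t base = sk_get_vm_area(span);

    if (!base)
        return -1;

    r->pfn = phys >> PAGE_SHIFT;
    r->mapped_size = span;
    r->vaddr = base + offset; /* keep the in-page offset */
    return 0;
}
```

A 0x20-byte register block that straddles a page boundary ends up with two pages mapped, which is why the span is computed from `offset + size` rather than from `size` alone.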

static int ioremap_pte_range(pmd_t *pmd, unsigned long addr, ...

If the architecture does not define io_remap_pfn_range(), it is equivalent to remap_pfn_range().

2.3.4. dma memory mapping

See the earlier article: DMA memory mapping

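References

basic

Virtual address mapping mechanisms: dynamic and static

memory mapping

Learning the memory mapping function remap_pfn_range: example analysis (1)

Learning the memory mapping function remap_pfn_range: example analysis (2)

Learning the memory mapping function remap_pfn_range: code analysis (3)

ioremap

Linux character device driver development basics (5): the ioremap() function explained

vmalloc

Linux high memory mapping (part 2)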